Key Features

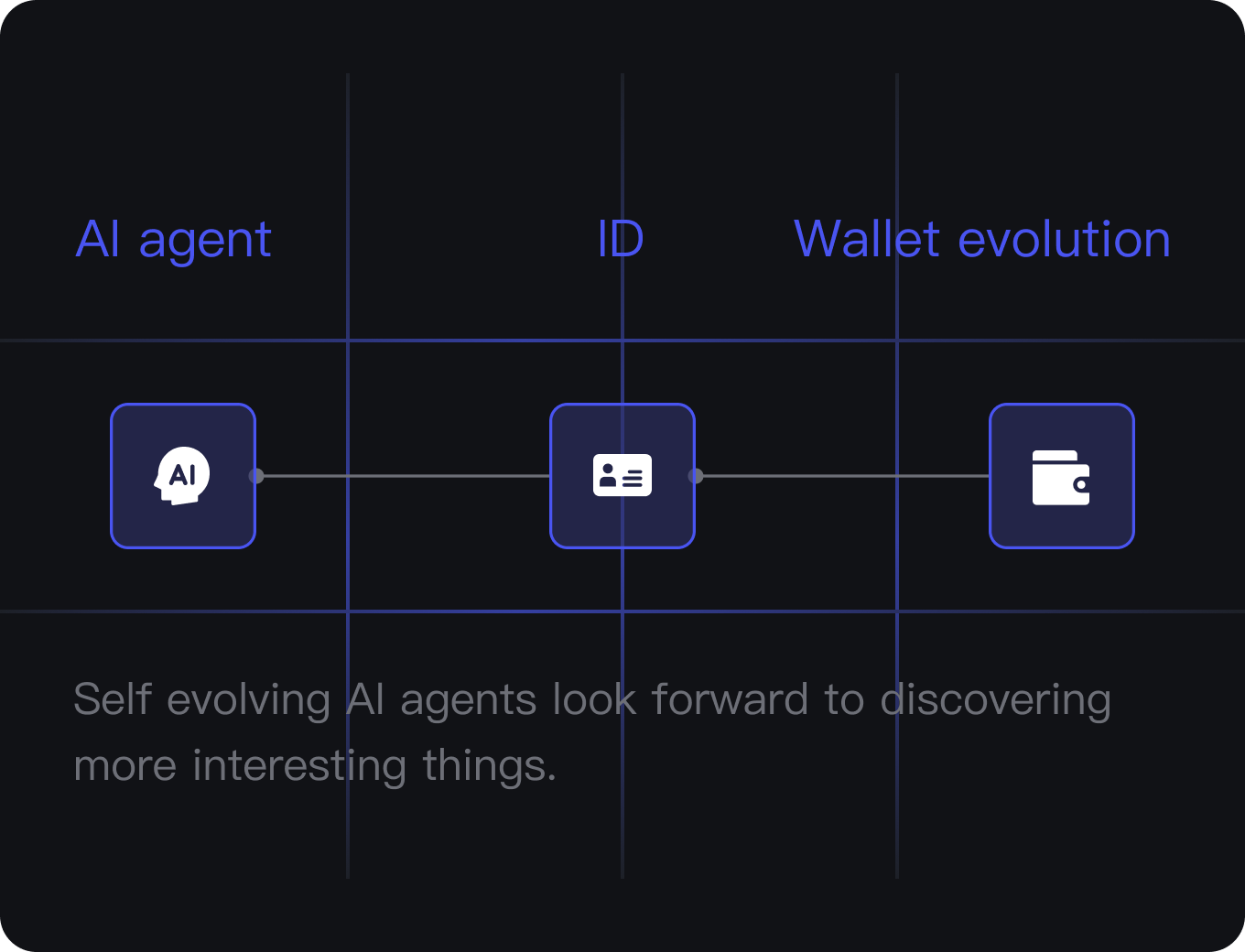

On-Chain Identity

Unibase ensures that every AI agent has a secure, verifiable on-chain identity, enabling trust and transparency across platforms.

Decentralized Memory

Unibase offers decentralized, scalable long-term memory storage, allowing AI agents to retain and build upon knowledge over time.

Multi-Agent Interoperability

Unibase supports seamless interaction and knowledge sharing between multiple agents, facilitating cross-platform collaboration and communication.

Continuous Self-Evolution

Unibase enables AI agents to autonomously learn, adapt, and evolve, improving their intelligence and capabilities with every interaction.

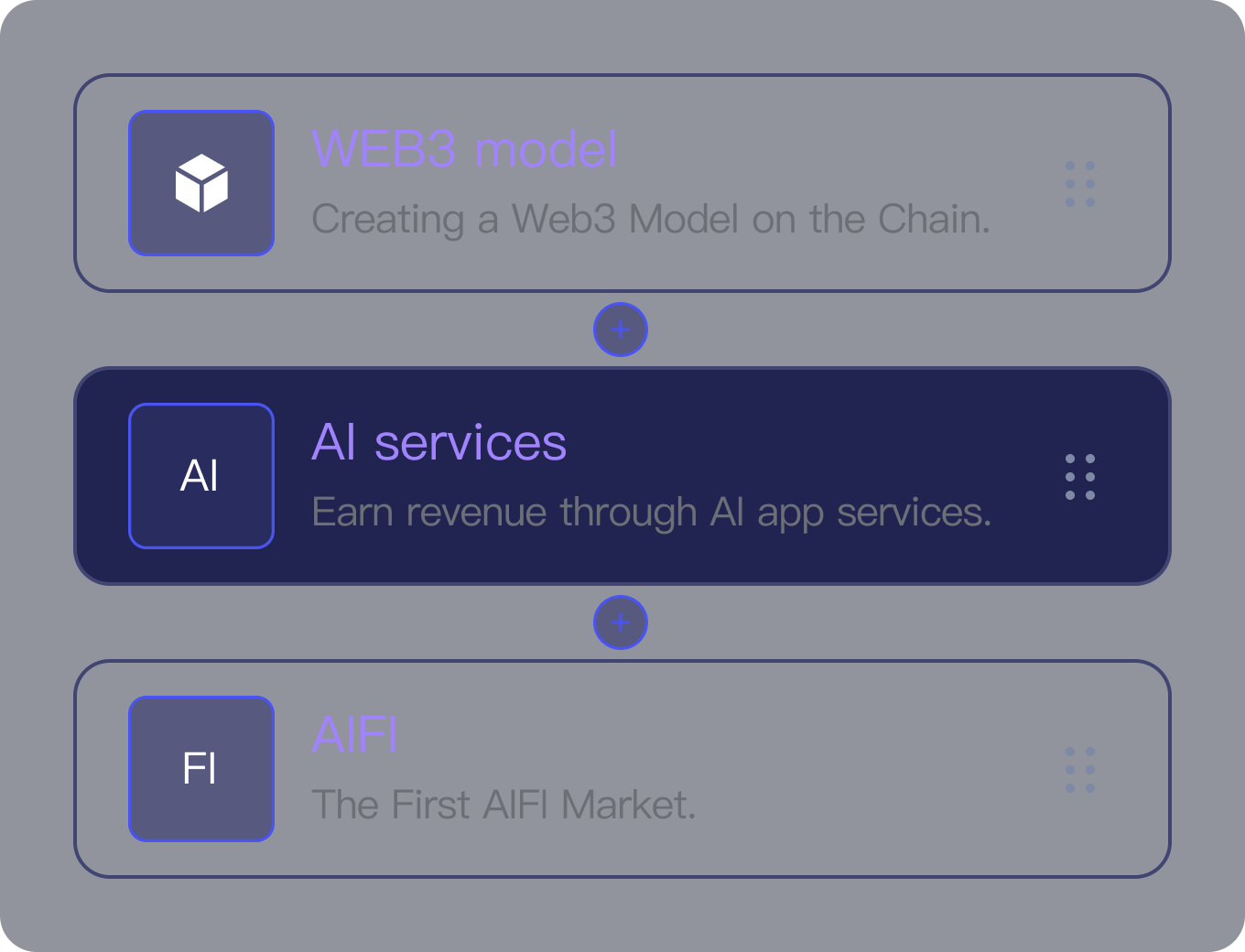

Core Components

Use Cases

Personalized DeFi Agents

Agents derive and refine personalized trading strategies from each user’s preferences.

Multi-Agent Gaming

Multiple agents coordinate in real time within decentralized games.

Knowledge Mining

Users earn tokens by contributing knowledge to an open, shared memory.

How to Participate

For Developers

Build decentralized, interoperable AI agents using AIP and connect them to Unibase’s memory and data availability (DA) layers.

For Users

Create your own on-chain AI agent and earn tokens by contributing knowledge and preferences.

For Miners

Run storage and DA nodes to support the Unibase network and earn infrastructure rewards.

For Investors

Join Unibase’s fair launch and help shape the Web3-native AI infrastructure through DAO participation.